The Ultimate Guide to Executing a Creative Testing Framework

As marketers, we know that what worked last week might not work this week, because the digital marketing world is constantly evolving. Being able to develop and execute a creative testing framework that generates fast, effective results is crucial to keeping up with the competition and with your audience. In this guide, we will explore the fundamental steps and strategies involved in developing a creative testing framework on Meta. Our goal is to equip you with the knowledge and tools needed to unlock the full potential of your ad creative!

Why is creative testing important?🧪

Creative testing is one of the most important tools growth marketers have in their belt, if not THE most important. It’s essential for uncovering valuable insights into what resonates with our target audience and what does not. Zeroing in on what works leads to higher-quality creative and, more importantly, better overall campaign performance. So let’s start at the beginning. The first step in any creative test is establishing a creative testing framework, which provides a clear and concise roadmap when it comes time to execute. The process requires insightful research, careful planning, and strategic rollout. For this, we follow the scientific method.

Research and Hypothesis🔬

All good experiments start with a hypothesis, and to come up with a hypothesis, you need to do research and gather insights. The best place to start is a creative analysis of historical performance. Comparing the data of your top-performing and bottom-performing creative lets you establish clear distinctions between them and makes it easy to identify aspects to test.
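If your historical ad data lives in a spreadsheet export, the top-versus-bottom comparison can be sketched in a few lines of Python. This is a minimal illustration, not a Tuff or Meta tool: the `AdRecord` fields and the practice of hand-labeling each ad with descriptive tags (for example `"founder"`) are assumptions. The function ranks ads by CTR and reports how often each tag appears in the top and bottom groups.

```python
from dataclasses import dataclass, field


@dataclass
class AdRecord:
    """One historical ad. Field names are hypothetical; map them to your own export."""
    name: str
    impressions: int
    clicks: int
    tags: set[str] = field(default_factory=set)  # hand-applied labels, e.g. {"founder"}

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def tag_share_by_group(ads: list[AdRecord], group_size: int) -> dict[str, tuple[float, float]]:
    """Rank ads by CTR and return {tag: (share of top ads, share of bottom ads)}."""
    if group_size < 1 or 2 * group_size > len(ads):
        raise ValueError("group_size must be >= 1 and keep the two groups non-overlapping")
    ranked = sorted(ads, key=lambda ad: ad.ctr, reverse=True)
    top, bottom = ranked[:group_size], ranked[-group_size:]

    def share(group: list[AdRecord], tag: str) -> float:
        # Fraction of ads in the group that carry this tag.
        return sum(tag in ad.tags for ad in group) / len(group)

    all_tags = set().union(*(ad.tags for ad in ads))
    return {tag: (share(top, tag), share(bottom, tag)) for tag in sorted(all_tags)}
```

A tag that shows up far more often among top performers than among bottom performers is a candidate for a hypothesis, not proof; the controlled test that follows is what separates cause from coincidence.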

In our case, the analysis pointed to one such aspect: whether the founder appeared in the creative. We wanted to determine whether this was just a coincidence or whether featuring the founder in creative concepts really has an effect on performance. This led us to our hypothesis: “Will creative concepts with the founder in them drive a higher CTR, and does a higher CTR result in a higher volume of purchases?” To test the hypothesis, we developed three creative assets that feature the founder to different degrees:

- One video without the founder

- One video starting with the founder

- One video with the founder throughout the entire video

Establishing your North Star🌟

Once we have our hypothesis, we plan how we are going to measure success. Establishing a “north star” metric is vital in determining whether the test was successful.

🙇 Example: For this test, our north star would be CTR and the volume of purchases.

At this step, it can also be beneficial to establish historical benchmarks for the “north star” metrics you are using. This lets you see how the winning creative concept stacks up against previous ad performance. This step is optional, but it adds valuable insight that helps further qualify the success of your test.
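Here is a minimal Python sketch of building a benchmark and comparing a test result against it. The `Totals` fields are illustrative, not a Meta export schema, and pooling totals across past periods (rather than averaging per-period CTRs) is our assumed design choice, since it keeps high-volume periods from being underweighted.

```python
from dataclasses import dataclass


@dataclass
class Totals:
    """Aggregated delivery stats. Field names are illustrative, not a Meta export schema."""
    impressions: int
    clicks: int
    purchases: int
    spend: float

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions

    @property
    def cost_per_purchase(self) -> float:
        return self.spend / self.purchases


def pooled_benchmark(history: list[Totals]) -> Totals:
    """Sum past periods so ratios are pooled rather than an average of per-period ratios."""
    return Totals(
        impressions=sum(t.impressions for t in history),
        clicks=sum(t.clicks for t in history),
        purchases=sum(t.purchases for t in history),
        spend=sum(t.spend for t in history),
    )


def pct_diff(value: float, baseline: float) -> float:
    """Percent difference of value vs. baseline; positive means higher than baseline."""
    return (value - baseline) / baseline * 100


def compare_to_benchmark(variant: Totals, benchmark: Totals) -> dict[str, float]:
    return {
        "ctr_pct_diff": pct_diff(variant.ctr, benchmark.ctr),
        # Negative here means the variant's purchases were cheaper than the benchmark.
        "cost_per_purchase_pct_diff": pct_diff(
            variant.cost_per_purchase, benchmark.cost_per_purchase
        ),
    }
```

In this framing, a CTR 69% above benchmark would show up as `ctr_pct_diff` of +69, and a 9% cheaper cost per purchase as `cost_per_purchase_pct_diff` of -9.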

Asking the important questions 🤔

Now that we have our hypothesis and have chosen the metrics that will determine whether this test is successful, it’s time to get to the nuts and bolts of how the test comes to life inside Meta Ads Manager. There are several questions that need to be answered: How much budget do I allocate to a creative test? How long do I run a creative test? What audience am I going to use? If you find yourself asking these same questions, you’ve come to the right place! ⬇️

🙋🏽 How much should I spend on a creative test?

When thinking about setting a budget, you have to keep in mind that this is a test! Some creative concepts will be fruitful while others… not so much. We recommend taking 10-15% of the monthly budget to use as a test budget. This will allow you to test and gather learnings quickly without risking your entire budget.
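To turn the 10-15% guideline into the daily budget you enter on each ad set, here is a quick Python sketch. It assumes (our assumption, not part of the guideline) that the full test budget is spent in a single test and paced evenly across ad sets and days; `plan_test_budget` is a hypothetical helper.

```python
def plan_test_budget(monthly_budget: float, num_concepts: int, test_days: int,
                     test_share: float = 0.10) -> dict[str, float]:
    """Split a share of the monthly budget evenly across concepts and test days.

    Assumes one test uses the whole test budget; adjust if you run several
    tests in the same month.
    """
    if num_concepts < 1 or test_days < 1:
        raise ValueError("num_concepts and test_days must be positive")
    test_budget = monthly_budget * test_share
    # One ad set per concept, each with the same daily budget.
    per_ad_set_daily = test_budget / (num_concepts * test_days)
    return {
        "test_budget": round(test_budget, 2),
        "daily_budget_per_ad_set": round(per_ad_set_daily, 2),
    }
```

For example, `plan_test_budget(30000, 3, 14)` sets aside 10% of a $30,000 month and spreads it across three ad sets for two weeks.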

🙋🏽 How long should I run a creative test?

The duration of the test hinges on two main factors: the size of your test budget (that 10-15%) and the size of your audience. A larger budget paired with a large audience might generate enough data within 7 days, thanks to greater exposure and more interactions, and so reach statistical significance faster. In contrast, a modest budget with a smaller audience might require a 2-week test to gather sufficient insights, because data accumulates more slowly from a limited pool of users. Striking the right balance between budget, audience size, and test duration is important for obtaining actionable results and getting the most out of your ad spend. As a general rule of thumb, we recommend running a test for at least 7 days and no longer than 2 weeks.
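For a rough sense of whether your budget and audience can reach significance within the 7-14 day window, you can estimate the impressions each ad set needs with the standard sample-size formula for comparing two proportions. This Python sketch treats each impression as an independent click/no-click trial, which is a simplification (people see ads more than once), and it is not how Meta’s A/B testing tool computes its results. The baseline CTR, target lift, and daily impressions are inputs you supply.

```python
import math
from statistics import NormalDist


def impressions_per_variant(baseline_ctr: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Impressions each ad set needs to detect a relative CTR lift.

    Uses the two-sided two-proportion z-test sample-size formula
    (no continuity correction).
    """
    if not 0 < baseline_ctr < 1 or relative_lift <= 0:
        raise ValueError("need 0 < baseline_ctr < 1 and relative_lift > 0")
    p1 = baseline_ctr
    p2 = baseline_ctr * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.8
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(baseline_ctr: float, relative_lift: float,
                daily_impressions_per_variant: int, **kwargs) -> int:
    """Days of delivery needed, given the impressions each ad set gets per day."""
    n = impressions_per_variant(baseline_ctr, relative_lift, **kwargs)
    return math.ceil(n / daily_impressions_per_variant)
```

If `days_needed` comes back well above 14, that is a signal to widen the audience, raise the test budget, or accept that only a larger lift will be detectable.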

🙋🏽 How do I pick the right audience?

Picking the right audience is a key factor in the overall success of the test. We want to gather fast and effective results, and a new audience, or a smaller audience further down the funnel, can take longer to produce learnings. Leveraging a top-performing upper-funnel audience has two main benefits: its size and its historical data. A larger upper-funnel audience lets you gather information faster because you can reach more users. In addition, a top-performing audience ensures that you are targeting your ICP (ideal customer profile), the people who have historically converted. An added bonus is that you can use the audience’s historical data to set a benchmark for how it performs when you are not testing.

Time to Build👷

Building out the campaign structure is the most important part of the entire testing process. There are tons of different ways to go about this, but we’ll share what Tuff has found to work the best!

Start by setting up a single campaign; this is where the test will live. The ad set level is where the test really takes shape. Set up one ad set with the daily budget and audience you have selected. Once that is set up, duplicate the ad set so that you have one ad set per creative concept you are testing. Next, build out your ads so that each ad set houses one creative variation. The final step is to open the A/B testing tool in Meta and select the ad sets you want to test against each other. At this point, the build is complete and your test is ready to go live!
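The structure can be modeled as plain data to make its shape clear: one campaign, one template ad set duplicated per concept, and exactly one ad per ad set. This Python sketch uses hypothetical classes and does not call the Meta Marketing API; it only mirrors the steps you would click through in Ads Manager, and it leaves out the A/B testing tool step.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class Ad:
    creative: str


@dataclass(frozen=True)
class AdSet:
    name: str
    audience: str
    daily_budget: float
    ads: tuple[Ad, ...] = ()


@dataclass
class Campaign:
    name: str
    ad_sets: list[AdSet] = field(default_factory=list)


def build_creative_test(name: str, audience: str, daily_budget: float,
                        creatives: list[str]) -> Campaign:
    """One campaign; one identical ad set per creative; one ad per ad set."""
    template = AdSet(name="template", audience=audience, daily_budget=daily_budget)
    campaign = Campaign(name=name)
    for i, creative in enumerate(creatives, start=1):
        # "Duplicate" the template (same budget and audience), then attach one creative.
        ad_set = replace(template, name=f"{name} - Ad Set {i}", ads=(Ad(creative),))
        campaign.ad_sets.append(ad_set)
    return campaign
```

Because every ad set is a copy of the same template, the only thing that differs between them is the creative, which is exactly the variable the test is meant to isolate.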

What are the benefits of this structure?

The A/B testing tool splits your audience evenly among the ad sets you have selected. Once someone is bucketed into an ad set, they will not be served ads from a different ad set, which ensures there is no audience overlap. Another benefit of this setup is that each piece of creative runs on its own: because every ad set has the same daily budget, each ad receives an equal budget and does not compete directly against other ads in the same ad set. This clean separation means clean data!
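Meta doesn’t publish exactly how its A/B testing tool assigns people to ad sets, but the idea of a stable, non-overlapping split can be illustrated with deterministic hashing: the same person always lands in the same ad set, and people spread roughly evenly across ad sets. This Python sketch is a conceptual illustration only; `assign_ad_set` and the `test_id` salt are hypothetical.

```python
import hashlib
from collections import Counter


def assign_ad_set(user_id: str, test_id: str, num_ad_sets: int) -> int:
    """Map a user to an ad set index in [0, num_ad_sets) deterministically.

    Hashing (test_id, user_id) means the same user always gets the same ad set
    within a test, so nobody is served creative from two ad sets, while a new
    test_id reshuffles assignments for the next test.
    """
    if num_ad_sets < 1:
        raise ValueError("num_ad_sets must be at least 1")
    digest = hashlib.sha256(f"{test_id}:{user_id}".encode("utf-8")).digest()
    # Read the first 8 bytes as an integer; modulo gives a near-uniform split.
    return int.from_bytes(digest[:8], "big") % num_ad_sets


if __name__ == "__main__":
    # Roughly a third of 30,000 simulated users should land in each of 3 ad sets.
    counts = Counter(assign_ad_set(f"user-{i}", "founder-test", 3) for i in range(30_000))
    print(sorted(counts.items()))
```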

The Results📊

Now that we have developed the creative testing framework and launched the test, it’s time to sit back and relax… That may seem funny to hear, but it’s the truth! While the test is live, we don’t want to make any changes that could affect the results.

After the test has concluded, the next step is to analyze performance. Focus on the metrics you established as your “north star” to determine the winning creative concept. Ultimately, you want to determine whether the data validates your hypothesis.
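Reading the north star metrics can be as simple as ranking ad sets by CTR, computing the winner’s lift over the runner-up, and checking whether the CTR gap is larger than chance would explain. This Python sketch uses a standard two-proportion z-test on clicks and impressions; the input format is an assumption, and Meta’s A/B test report uses its own methodology, so treat this as a cross-check rather than a replacement.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(clicks_a: int, imps_a: int,
                          clicks_b: int, imps_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in CTR; returns (z, p_value)."""
    p_a, p_b = clicks_a / imps_a, clicks_b / imps_b
    pooled = (clicks_a + clicks_b) / (imps_a + imps_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / imps_a + 1 / imps_b))
    z = (p_a - p_b) / se
    return z, 2 * (1 - NormalDist().cdf(abs(z)))


def summarize_test(results: dict[str, tuple[int, int, int]], alpha: float = 0.05) -> dict:
    """results maps ad set name -> (impressions, clicks, purchases)."""
    if len(results) < 2:
        raise ValueError("need at least two ad sets to compare")
    ranked = sorted(results.items(), key=lambda kv: kv[1][1] / kv[1][0], reverse=True)
    (winner, (w_imps, w_clicks, w_purch)), (runner_up, (r_imps, r_clicks, r_purch)) = ranked[:2]
    z, p_value = two_proportion_z_test(w_clicks, w_imps, r_clicks, r_imps)
    return {
        "winner": winner,
        "runner_up": runner_up,
        "ctr_lift_pct": ((w_clicks / w_imps) / (r_clicks / r_imps) - 1) * 100,
        # Raw purchase counts are comparable only because each ad set had an equal budget.
        "purchase_lift_pct": (w_purch / r_purch - 1) * 100,
        "ctr_z": z,
        "ctr_p_value": p_value,
        "ctr_significant": p_value < alpha,
    }
```

Comparing raw purchase counts is fair here only because the build gave every ad set the same budget and audience; if budgets differed, compare cost per purchase instead.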

To further qualify the winning creative concept, compare it to your historical benchmarks. This gives you additional insight into just how successful the test was relative to overall account performance. These results leave you with a new creative concept to rotate into your existing campaigns, as well as valuable insights for developing high-performing, data-backed creative concepts going forward!

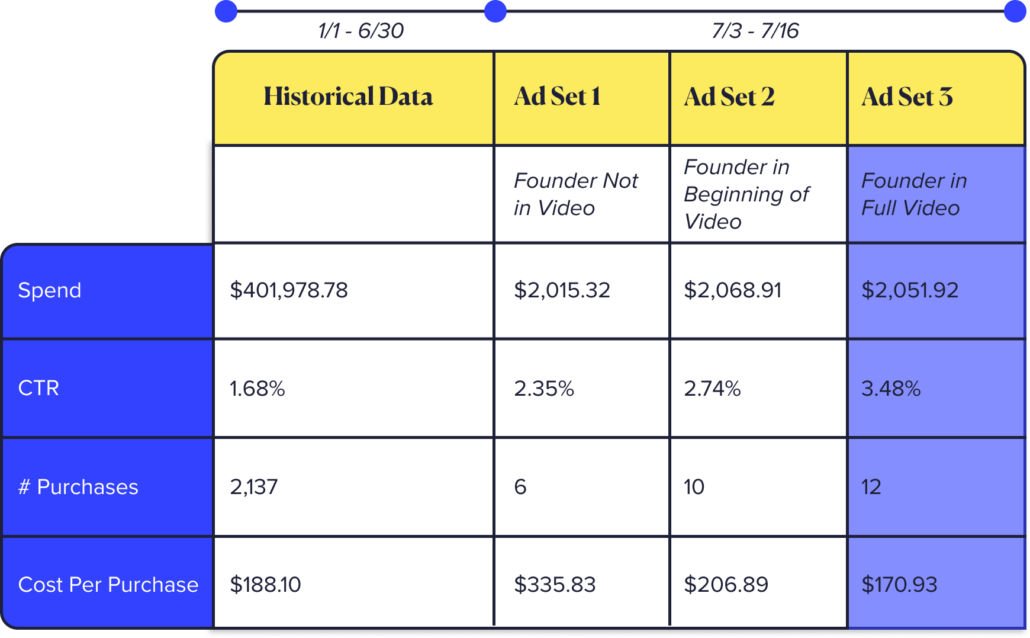

Campaign Results

Example data table presenting the click-through rates (CTR) and purchases for 3 ad set variations. Ad Set 3 received a higher CTR, leading to 18% more conversions compared to Ad Set 2.

Takeaways

Hooray! We validated our hypothesis that concepts featuring the founder would lead to a higher CTR and more purchases. The ad with the founder throughout the full video was crowned the winner, with a 23% higher CTR and 18% more purchases than the runner-up. 👑

Bonus! Compared to our historical averages, the winning founder video had a 69% higher CTR and a 9% lower cost per purchase.

Based on this analysis, we can confidently conclude that the concept with the founder throughout the full video resonated strongly with our target audience. Given the statistical significance we saw when comparing the winning concept to our historical benchmarks, we expect this concept to generate above-average performance at scale in our other campaigns. To build on that success, we rotated the winning concept into our other campaigns, and we’ll use our findings to inform future rounds of creative.

Recap

In conclusion, creative testing is an important tool to help navigate the ever-changing landscape of digital marketing. Creating and executing a creative testing framework involves insightful research, careful planning, and strategic execution. Leveraging the testing framework above will allow you to do just that! So go on and uncover valuable data, enhance your creative, and catapult your campaign performance. Need a hand in getting started? Tuff’s got your back.